The aim of an ASIC / ASV system (such as Mnova Verify) is to evaluate analytical data and judge whether it is compatible with the structure proposed by the user. In this article we will not discuss the acquisition of the analytical data, where we lack expertise; instead, we start from the point where the raw analytical data has been collected by the instrument(s) and is available for evaluation and analysis.

This first article on the subject gives a high-level overview. A series of articles will follow on the specifics of each step of the workflow, for readers interested in more detailed information.

At a high level, an ASV system that aims to confirm the structure of a sample by means of analytical data needs several principal components. Different approaches or strategies can be used to implement each of them, but all of them must be present for the system to achieve its purpose. These integral components are:

Integral components of any ASV system

- Ability of the system to import or parse raw or processed experimental data.

- Interpretation of the experimental data (processing and analysis).

- Generation of 'theoretical data' from the proposed molecular structure, for comparison with the experimental data (this theoretical data may be actual predicted spectra, the approach typically taken for this problem, or it could be a set of descriptors of the expected data).

- Use of an algorithm or series of algorithms to compare experimental with theoretical data.

- Presentation of the results of such comparison.

- Potential integration with other systems and workflows in the organization where the ASV / ASIC system is deployed.
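As an illustration of how these components fit together, the following minimal Python sketch wires them up as plain functions passed into one driver. All names here (`Evidence`, `Verdict`, `verify`, and the `parse`, `predict`, `compare` and `combine` callables) are hypothetical placeholders, not Mnova Verify's API; the point is only that each component can be swapped independently of the others.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class Evidence:
    """Hypothetical result of importing and interpreting one data set."""
    technique: str          # e.g. "1H", "HSQC", "LCMS"
    features: List[float]   # e.g. picked shifts or m/z values

@dataclass
class Verdict:
    """Hypothetical outcome handed to the presentation/integration layer."""
    structure: str
    score: float
    details: Dict[str, float] = field(default_factory=dict)

def verify(structure: str,
           raw_data: Dict[str, bytes],
           parse: Callable[[str, bytes], Evidence],
           predict: Callable[[str, str], List[float]],
           compare: Callable[[List[float], List[float]], float],
           combine: Callable[[Dict[str, float]], float]) -> Verdict:
    """Run one proposed structure through import, prediction and comparison."""
    details: Dict[str, float] = {}
    for technique, blob in raw_data.items():
        evidence = parse(technique, blob)             # import + interpretation
        theoretical = predict(structure, technique)   # theoretical data
        details[technique] = compare(evidence.features, theoretical)
    return Verdict(structure, combine(details), details)  # ready for reporting
```

In a real system each callable would hide a substantial subsystem; keeping them as parameters makes it easy to test each stage in isolation.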

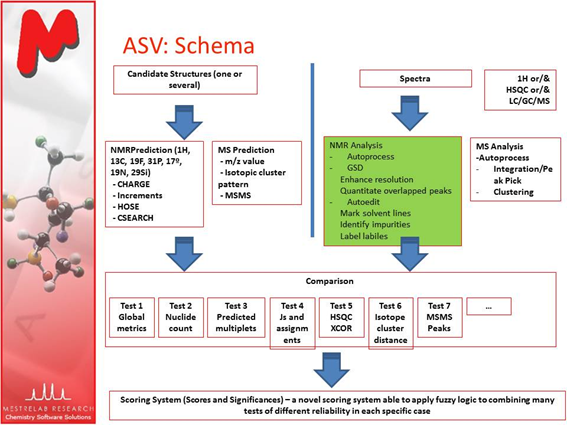

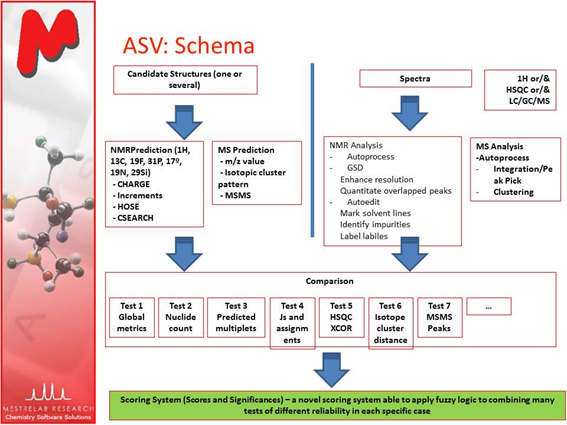

The rest of this article looks in more detail at the approach followed by Mestrelab Research in the development of our ASV / ASIC system, Mnova Verify. This strategy is illustrated in Figure 1. The description is intended as an illustration of generic approaches to this problem.

Structure proposals

In the Mestrelab ASV system, the user can propose one or several structures for each set of spectra. Proposing one structure results in an evaluation of that structure's compatibility with the analytical data; proposing several structures results, as a consequence of the individual evaluation of each one, in a ranking of the structures according to which is the most likely candidate given the analytical data provided. Structures can be proposed to the system by drawing them in any of a number of widely available structure drawing packages (ChemDraw, ISIS/Draw, Symyx Draw, ChemSketch, etc.) or by opening a MOL or SDF file in the system.
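Because each structure is evaluated individually, ranking several proposals amounts to scoring each one and sorting the results. A minimal sketch, assuming a hypothetical `score` callable that returns a higher value for better agreement with the analytical data:

```python
from typing import Callable, List, Tuple

def rank_structures(candidates: List[str],
                    score: Callable[[str], float]) -> List[Tuple[str, float]]:
    """Score each proposed structure independently, then sort best-first."""
    scored = [(structure, score(structure)) for structure in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

With a single candidate the same function simply returns that candidate's score, which matches the one-structure case described above.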

Analytical data

As with any system of this type, the Mestrelab ASV system needs to import, process and analyze raw experimental data to extract the information needed for comparison with the theoretical data generated from the proposed structure(s), and thus to evaluate their compatibility. How widely applicable such a system is depends on the variety of data types it supports, as most users of this kind of system work in laboratories where hardware and data systems from different vendors coexist.

Our system therefore reads in, and processes:

- NMR data from Agilent, Bruker, JEOL and a number of other hardware manufacturers, as well as standard exchange formats such as JCAMP [1].

- LCMS or GCMS data from AB Sciex, Agilent, Bruker, JEOL, Thermo, Waters and a number of other hardware manufacturers, as well as standard exchange formats such as JCAMP [2], mzData [3], mzXML [4] and NetCDF (http://en.wikipedia.org/wiki/NetCDF; ASTM E1947-98(2009), Standard Specification for Analytical Data Interchange Protocol for Chromatographic Data).
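Supporting many formats usually starts with recognizing what a file is. The sketch below guesses a few of the exchange formats named above from a file's first bytes, and an NMR vendor from the file names in an acquisition folder. It is a simplified heuristic built on stated assumptions (JCAMP-DX files beginning with `##TITLE=`, classic NetCDF files beginning with `CDF\x01` or `CDF\x02`, Bruker folders containing `acqus` plus `fid` or `ser`, Agilent/Varian folders containing `procpar` and `fid`); the helper names are hypothetical, and a production reader would validate much more.

```python
from typing import Set

def sniff_format(head: bytes) -> str:
    """Guess an exchange format from the first bytes of a file (hypothetical helper)."""
    if head.startswith((b"CDF\x01", b"CDF\x02")):
        return "NetCDF (classic)"
    if head.startswith(b"\x89HDF\r\n\x1a\n"):
        return "NetCDF-4/HDF5"
    text = head.decode("latin-1").lstrip()   # latin-1 never fails to decode
    if text.startswith("##TITLE="):
        return "JCAMP-DX"
    if "<mzXML" in text:
        return "mzXML"
    if "<mzData" in text:
        return "mzData"
    return "unknown"

def sniff_vendor_dir(file_names: Set[str]) -> str:
    """Guess an NMR vendor from the file names inside an acquisition folder."""
    if "acqus" in file_names and ({"fid", "ser"} & file_names):
        return "Bruker"
    if {"procpar", "fid"} <= file_names:
        return "Agilent/Varian"
    return "unknown"
```

Once the format is known, the data can be routed to the matching reader before any processing starts.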

For an ASV system to work reliably in full automation, every processing and analysis step that a chemist would typically perform interactively must be optimized to run without any user interaction. A set of algorithms therefore needs to be developed to carry out the following processing and analysis steps fully automatically:

NMR Data

For NMR data (not comprehensively, as this will be discussed in detail in a later article), the software must correctly identify the pulse sequence and experimental conditions and apply the appropriate window (apodization) functions, zero filling and Fourier transform, phase and baseline correction, advanced peak picking, integration and multiplet analysis (the latter steps often requiring deconvolution). In addition, the software must reliably identify and ignore solvent signals (both the NMR solvent and synthetic solvents that may remain from previous synthesis steps) and impurities.
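A few of these NMR steps can be sketched in dependency-free Python: exponential apodization, zero filling, a Fourier transform, simple threshold peak picking and removal of known solvent signals. This is a toy under stated assumptions: the FID is synthetic and already in phase, so phase and baseline correction are skipped; a naive DFT stands in for an FFT; and the residual-solvent shifts are commonly tabulated CDCl3 values. None of the function names come from Mnova.

```python
import cmath
import math
from typing import Dict, List

def exponential_window(fid: List[complex], lb_hz: float, sw_hz: float) -> List[complex]:
    """Exponential apodization: scale point k by exp(-pi * LB * t_k), with t_k = k / SW."""
    return [x * math.exp(-math.pi * lb_hz * k / sw_hz) for k, x in enumerate(fid)]

def zero_fill(fid: List[complex], size: int) -> List[complex]:
    """Pad the FID with zeros up to `size` points (interpolates the spectrum)."""
    return fid + [0j] * max(0, size - len(fid))

def dft(signal: List[complex]) -> List[complex]:
    """Naive O(n^2) discrete Fourier transform; a real pipeline would use an FFT."""
    n = len(signal)
    return [sum(x * cmath.exp(-2j * math.pi * j * k / n) for k, x in enumerate(signal))
            for j in range(n)]

def pick_peaks(spectrum: List[float], threshold: float) -> List[int]:
    """Indices of local maxima above `threshold` in a phased, real spectrum."""
    return [i for i in range(1, len(spectrum) - 1)
            if spectrum[i] > threshold
            and spectrum[i] >= spectrum[i - 1] and spectrum[i] > spectrum[i + 1]]

def drop_solvent_peaks(shifts_ppm: List[float], solvent_ppm: Dict[str, float],
                       tol_ppm: float = 0.02) -> List[float]:
    """Discard peaks within `tol_ppm` of a known solvent or residual-solvent signal."""
    return [s for s in shifts_ppm
            if all(abs(s - ref) > tol_ppm for ref in solvent_ppm.values())]

# Commonly tabulated residual signals in CDCl3 (ppm); extend for the solvents in use.
SOLVENTS_CDCL3 = {"CHCl3": 7.26, "H2O": 1.56}
```

For a synthetic 250 Hz signal sampled with a 1000 Hz spectral width, 64 points long and zero-filled to 128, the single picked peak lands at index 32, i.e. 32 × 1000 / 128 = 250 Hz.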

LCMS or GCMS data

For LCMS or GCMS data (again, not comprehensively), the software must be able to identify acquisition parameters such as ionization mode and mass accuracy, read in the data, peak pick and integrate chromatographic traces (TIC, UV/DAD/PDA, ELSD, CLND, etc.), subtract backgrounds, peak pick the mass spectra and group the peaks into isotope clusters, and read in and peak pick MSMS or higher-order MS traces.
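Isotope clustering can be illustrated with a greedy heuristic: the isotope peaks of one ion are spaced by roughly 1.00336/z in m/z (the 13C-12C mass difference divided by the charge). The sketch below assumes centroided `(m/z, intensity)` pairs and a fixed absolute tolerance; it ignores intensity ratios and overlapping clusters, which a real implementation would need to handle, and isolated peaks simply default to the first candidate charge.

```python
from typing import Dict, List, Sequence, Tuple

C13_SPACING = 1.003355  # mass difference between 13C and 12C, in Da

def cluster_isotopes(peaks: List[Tuple[float, float]], tol: float = 0.01,
                     charges: Sequence[int] = (1, 2, 3)) -> List[Dict]:
    """Greedily group centroided (m/z, intensity) peaks into isotope clusters.

    Starting from the lowest unassigned m/z, follow peaks spaced by C13_SPACING / z
    for each candidate charge z and keep the charge that explains the longest chain.
    """
    peaks = sorted(peaks)
    used = [False] * len(peaks)
    clusters = []
    for i, (mz0, _) in enumerate(peaks):
        if used[i]:
            continue
        best_z, best_members = charges[0], [i]
        for z in charges:
            members, n = [i], 1
            while True:
                target = mz0 + n * C13_SPACING / z
                match = next((j for j in range(i + 1, len(peaks))
                              if not used[j] and abs(peaks[j][0] - target) <= tol), None)
                if match is None:
                    break
                members.append(match)
                n += 1
            if len(members) > len(best_members):   # ties keep the lower charge
                best_z, best_members = z, members
        for j in best_members:
            used[j] = True
        clusters.append({"charge": best_z, "peaks": [peaks[j] for j in best_members]})
    return clusters
```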

At this point in the process, a decision must be made not only about which vendors to support, but also about which analytical techniques and experiment types to include in the automated analysis. Whilst there is an argument for using every data type available, as most experiments add relevant information, an important consideration is whether the experiments can realistically be acquired in the context in which an ASV/ASIC system is to be deployed, which is typically a fairly high-throughput environment. The system must therefore work reliably using only routine experiments such as 1H NMR, HSQC and LCMS or GCMS (in most cases, it is not viable to expect users of the system to run additional, time-consuming experiments such as HMBC or 13C-13C INADEQUATE). Ideally, and in order to support as many applications as possible for an ASV/ASIC system within an organization, the system should be flexible enough to work with different combinations of data types. The Mestrelab system, for example, can work with any of 1H, HSQC, LCMS or GCMS data, and with any combination of these data types.

Generation of theoretical data

Once the structures have been imported into the system, theoretical data must be generated for comparison with the parsed, processed and analyzed experimental data. In the case of our system, it is necessary to predict:

- 1H NMR, for which a combination of two methods is used: CHARGE, developed by Prof. Ray Abraham at the University of Liverpool, and an increments method developed by Prof. Erno Pretsch at ETH Zurich. For more detail on these methods, see further reading [5] to [53].

- 13C NMR, which relies on the work of Prof. Wolfgang Robien at the University of Vienna and combines HOSE code based predictions with a neural network designed to handle situations where the HOSE codes presented to the software are not represented in its database. For more information on these methods, see further reading [28].

- Mass ion prediction, isotopic cluster prediction, fragmentation and MSMS prediction.
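For the simplest of these predictions, the protonated monoisotopic ion and the isotope cluster can be computed directly from the molecular formula. The sketch below is limited to C, H, N and O, uses standard monoisotopic masses and natural abundances, and works with nominal-mass isotope peaks. It says nothing about fragmentation or MSMS prediction, and it is not the prediction code used in Mnova.

```python
import re
from collections import defaultdict
from typing import Dict, List

# (monoisotopic mass in Da, natural abundance) per isotope, lightest first.
ISOTOPES = {
    "C": [(12.0, 0.9893), (13.0033548378, 0.0107)],
    "H": [(1.00782503207, 0.999885), (2.0141017778, 0.000115)],
    "N": [(14.0030740048, 0.99636), (15.0001088982, 0.00364)],
    "O": [(15.99491461956, 0.99757), (16.99913170, 0.00038), (17.9991610, 0.00205)],
}
PROTON = 1.007276467  # proton mass, Da

def parse_formula(formula: str) -> Dict[str, int]:
    """Count atoms in a simple formula such as 'C8H10N4O2' (no brackets)."""
    counts: Dict[str, int] = defaultdict(int)
    for element, n in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[element] += int(n) if n else 1
    return counts

def mh_plus(formula: str) -> float:
    """Monoisotopic m/z of [M+H]+ for a neutral formula."""
    counts = parse_formula(formula)
    return sum(ISOTOPES[el][0][0] * n for el, n in counts.items()) + PROTON

def isotope_pattern(formula: str, max_shift: int = 4) -> List[float]:
    """Relative intensities (%) of M, M+1, ... by convolving atom isotope distributions."""
    dist: Dict[int, float] = {0: 1.0}
    for el, n in parse_formula(formula).items():
        lightest = ISOTOPES[el][0][0]
        for _ in range(n):
            new: Dict[int, float] = defaultdict(float)
            for shift, p in dist.items():
                for mass, abundance in ISOTOPES[el]:
                    s = shift + round(mass - lightest)   # nominal mass offset
                    if s <= max_shift:
                        new[s] += p * abundance
            dist = new
    top = max(dist.values())
    return [round(100 * dist.get(s, 0.0) / top, 1) for s in range(max_shift + 1)]
```

For caffeine (C8H10N4O2), `mh_plus` gives about 195.0877 and the M+1 peak comes out at roughly 10% of M; passing the protonated formula (C8H11N4O2) gives the pattern of the [M+H]+ ion itself.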

We will devote one or several articles later on in this publication to providing further details about these prediction approaches.

A further important aspect of ASV systems is the potential to train the prediction algorithms underlying the system by adding experimental assignments. This can act as a self-feeding, virtuous loop: a poor prediction often results in a failure or a lower score for that verification, so the ASV system directs the analyst's or chemist's attention to potentially poor predictions, and on investigating such a failure, the poor prediction can be identified and corrected.
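One simple way to picture such a feedback loop: keep user-confirmed assignments keyed by a description of each atom's environment and let them take precedence over the base prediction. In the sketch below, the environment code is a hypothetical stand-in for something like a HOSE code, averaging the confirmed shifts is an assumption, and the class is not Mnova's training mechanism.

```python
from typing import Callable, Dict, List

class AssignmentAwarePredictor:
    """Prefer user-confirmed experimental shifts over a base prediction (hypothetical)."""

    def __init__(self, base_predict: Callable[[str], float]):
        self.base_predict = base_predict                 # environment code -> ppm
        self.assignments: Dict[str, List[float]] = {}    # environment code -> confirmed ppm

    def add_assignment(self, env_code: str, shift_ppm: float) -> None:
        """Store a corrected assignment, e.g. after investigating a failed verification."""
        self.assignments.setdefault(env_code, []).append(shift_ppm)

    def predict(self, env_code: str) -> float:
        confirmed = self.assignments.get(env_code)
        if confirmed:
            return sum(confirmed) / len(confirmed)       # learned value wins
        return self.base_predict(env_code)               # fall back to the model
```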

Comparison of experimental with theoretical data

Once both theoretical and experimental data are available, the next step in an ASV workflow is to compare them, identify the differences, and evaluate how important those differences are and whether they indicate that the proposed structure may be incorrect.
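For a 1D list of shifts with exactly one experimental peak per predicted shift, pairing both lists in sorted order minimizes the total absolute deviation. The sketch relies on that fact to match predicted and experimental 1H shifts and flag deviations above an assumed tolerance; real assignments must also respect integrals, multiplicities and unequal peak counts, which this ignores.

```python
from typing import List, Tuple

def match_shifts(predicted: List[float], experimental: List[float],
                 tol: float = 0.3) -> List[Tuple[float, float, float, bool]]:
    """Pair sorted shifts; return (predicted, experimental, |deviation|, flagged)."""
    if len(predicted) != len(experimental):
        raise ValueError("sketch assumes one experimental peak per predicted shift")
    pairs = zip(sorted(predicted), sorted(experimental))
    return [(p, e, abs(p - e), abs(p - e) > tol) for p, e in pairs]
```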

In a pharmaceutical industry setting, we think it makes sense to consider as a pass only those structures that are not merely present in the sample but are the main species in it. The question we are answering is not 'Is my proposed structure here?', but rather 'Does the major species in this sample correspond to my proposed structure?'. This has implications for how the analysis is performed.
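The difference between 'present' and 'main species' can be made concrete with chromatographic peaks. In this sketch each peak is a hypothetical record with an integrated `area` and the `mz` values detected under it; only the largest peak is allowed to confirm the expected ion, so a correct compound that is only a minor component does not pass.

```python
from typing import Dict, List

def is_present(chrom_peaks: List[Dict], expected_mz: float, tol: float = 0.01) -> bool:
    """True if the expected ion is seen under any chromatographic peak."""
    return any(abs(mz - expected_mz) <= tol for p in chrom_peaks for mz in p["mz"])

def major_species_matches(chrom_peaks: List[Dict], expected_mz: float,
                          tol: float = 0.01) -> bool:
    """True only if the expected ion is seen under the largest chromatographic peak."""
    if not chrom_peaks:
        return False
    major = max(chrom_peaks, key=lambda p: p["area"])
    return any(abs(mz - expected_mz) <= tol for mz in major["mz"])
```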

Comparing theoretical with experimental data is a fascinating subject, which can be approached in many different ways. Any successful comparison in the realm of analytical chemistry should, however, have some basic characteristics:

- It must be able to accommodate and combine tests from different analytical techniques, as it is perfectly possible, and often the case, that some tests disagree with others, even within the same analytical technique; this is compounded when several techniques are used.

- It must be able to place an estimate of reliability on every test to handle situations where tests disagree.

- It must be fuzzy enough to account for the fact that the tests are performed under less than ideal conditions: real-life data poses many challenges, predictions vary in accuracy, etc.

- It must be able to combine the results of different tests and techniques into a final conclusion, ideally a single value, which allows results from different problems to be compared and can point the user to where problems may lie, even when analyzing large volumes of data.
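A simple way to satisfy these four requirements is a reliability-weighted vote over fuzzy test outcomes. In the sketch below, each check maps its deviation to a support value between 0 and 1 with a linear ramp between assumed 'good' and 'bad' thresholds, converts that to a vote in [-1, 1], and averages the votes weighted by reliability. This is a generic weighted fuzzy scheme chosen for illustration, not the jury-inspired scoring that Mestrelab uses.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Check:
    name: str
    deviation: float    # e.g. mean |delta ppm| for NMR or mass error in ppm for MS
    good: float         # at or below this deviation the check fully supports the structure
    bad: float          # at or above this deviation the check fully contradicts it
    reliability: float  # weight in [0, 1] expressing trust in this check

def fuzzy_support(c: Check) -> float:
    """Trapezoidal membership: 1 when good, 0 when bad, linear in between."""
    if c.deviation <= c.good:
        return 1.0
    if c.deviation >= c.bad:
        return 0.0
    return (c.bad - c.deviation) / (c.bad - c.good)

def combined_score(checks: List[Check]) -> float:
    """Reliability-weighted mean of votes in [-1, 1]; +1 means full support."""
    total_weight = sum(c.reliability for c in checks)
    if total_weight == 0:
        return 0.0
    return sum(c.reliability * (2 * fuzzy_support(c) - 1) for c in checks) / total_weight
```

A check that sits halfway between its thresholds casts a neutral vote, so disagreement between tests pulls the single final value towards zero instead of flipping it outright.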

At Mestrelab, we have developed a novel scoring system to handle these challenges, which could be considered to be inspired by jury dynamics or game theory. It will be described in more detail in a later article in this publication.

Presentation/reporting of results

A final and very important step in an ASV system is the presentation of results. This must be flexible, as different users may have different needs when using ASV systems. One user may run a batch verification against a large library and need a bird's-eye view of thousands of results, whilst another may have run a single verification and be interested in the details that led to the pass or the fail. To provide this flexibility, the software must be able to output results in several different formats, for both single and multiple analyses.
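As a sketch of serving both audiences, the batch summary below is written as CSV sorted worst-first, so problem cases surface at the top, while a single result is written as JSON with its full per-test details. The result dictionaries and their field names are assumptions made for illustration.

```python
import csv
import io
import json
from typing import Dict, List

def batch_summary_csv(results: List[Dict]) -> str:
    """One row per verification, lowest score first."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["sample", "structure", "score", "outcome"])
    writer.writeheader()
    for r in sorted(results, key=lambda r: r["score"]):
        writer.writerow({k: r[k] for k in writer.fieldnames})
    return buf.getvalue()

def single_detail_json(result: Dict) -> str:
    """Full detail of one verification, including per-test scores."""
    return json.dumps(result, indent=2, sort_keys=True)
```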

In all cases, it is also important to afford the scientist access to the analysis parameters and to allow reprocessing, re-analysis and re-verification of the data, to avoid full reliance on the fully automated verification system.

Forthcoming articles in this section will focus on different steps of the ASV process, as implemented in our system and our Mnova software. We will also aim to cover different applications and contexts in which ASV / ASIC may be used, discuss the data quality requirements for such a system, etc.

- [1] A. N. Davies and P. Lampen, "JCAMP-DX for NMR", Appl. Spectrosc. 47(8), 1093–1099 (1993).

- [2] P. Lampen, H. Hillig, A. N. Davies and M. Linscheid, "JCAMP-DX for Mass Spectrometry", Appl. Spectrosc. 48(12), 1545–1552 (1994).

- [3] Proteomics 7(19), 3436–3440 (2007). doi:10.1002/pmic.200700658

- [4] Nat. Biotechnol. 22(11), 1459–1466 (2004). doi:10.1038/nbt1031